Tech.LGBT

Information Site for the tech.lgbt Fediverse Instance

Report: Mastodon Moderation Transparency

by tech.lgbt Moderation Team

First, thank you to everyone who responded to the survey for your patience. Many of you indicated that you would like to be notified when the survey results were released. It has taken some time to review the results, draw conclusions, and determine next steps and suggestions for moving forward.

This report goes over key findings in the survey, some personal insights and interpretations, concerns over tensions in transparency expectations, and recommendations for moderation reporting. The raw data of the survey is also included at the end of the report.

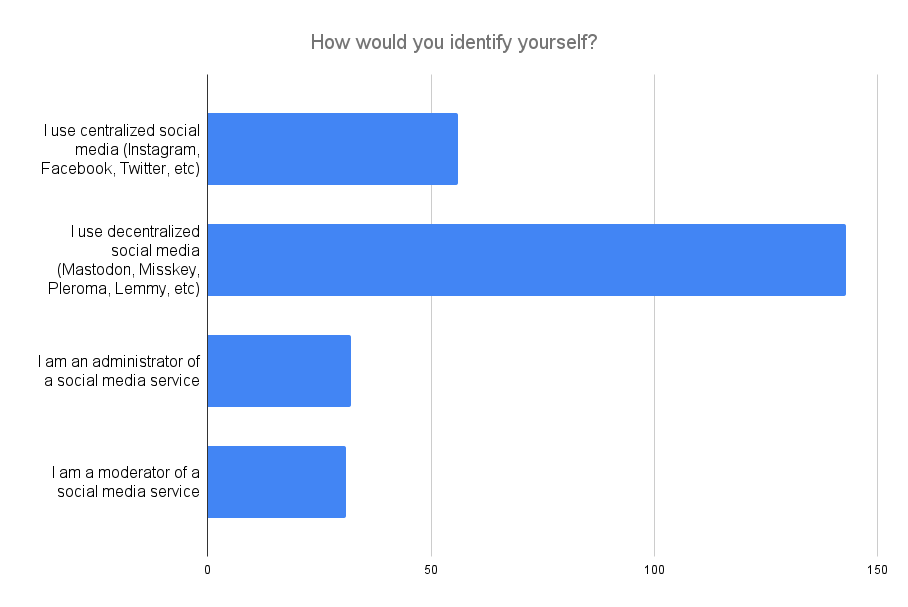

The survey that this report is based on was conducted by @david@tech.lgbt via their personal Mastodon account from 30 December 2024 to 31 January 2025. It was run on a self-hosted CryptPad form, with the intention of anonymizing respondents for trust and safety purposes.

Key Findings:

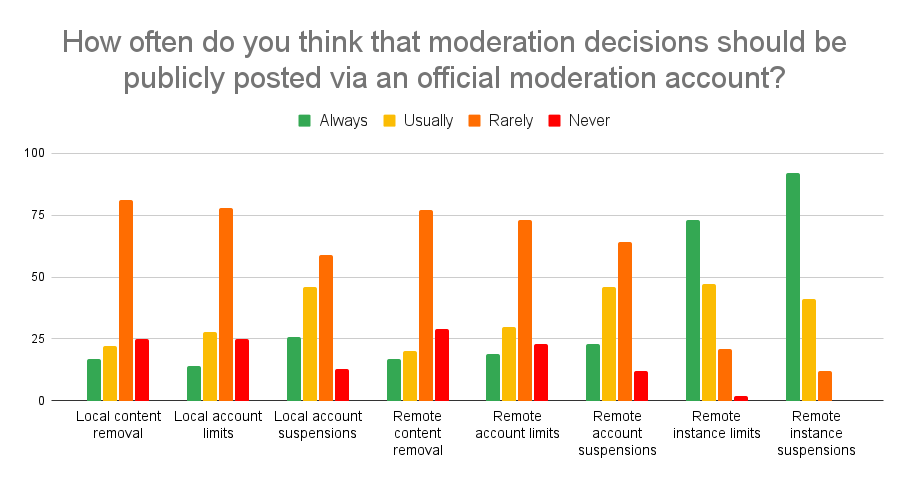

1. Public Posting of Moderation Decisions:

Respondents strongly favored transparency, especially for remote moderation actions, mainly instance suspensions.

Decisions affecting access of remote instances were considered more critical to publicly disclose compared to local ones, suggesting a higher accountability expectation when moderation decisions impact the connections to external communities.

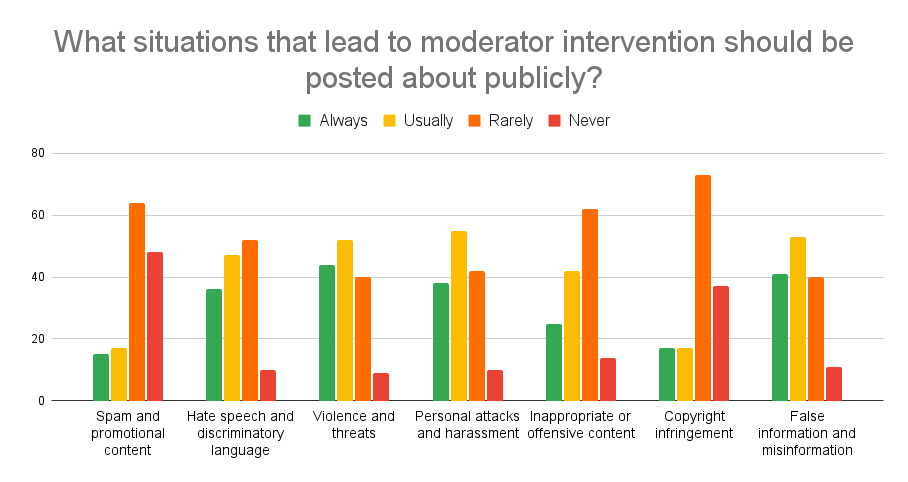

2. Situations for Public Disclosure:

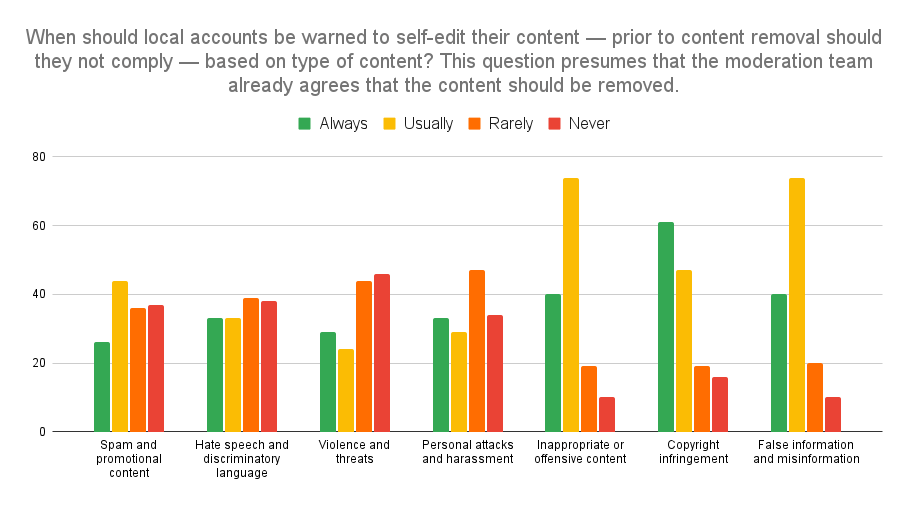

High-priority categories for public moderation announcements included violence/threats, personal attacks, harassment, hate speech, and misinformation.

Spam, copyright infringement, and inappropriate content received lower priority.

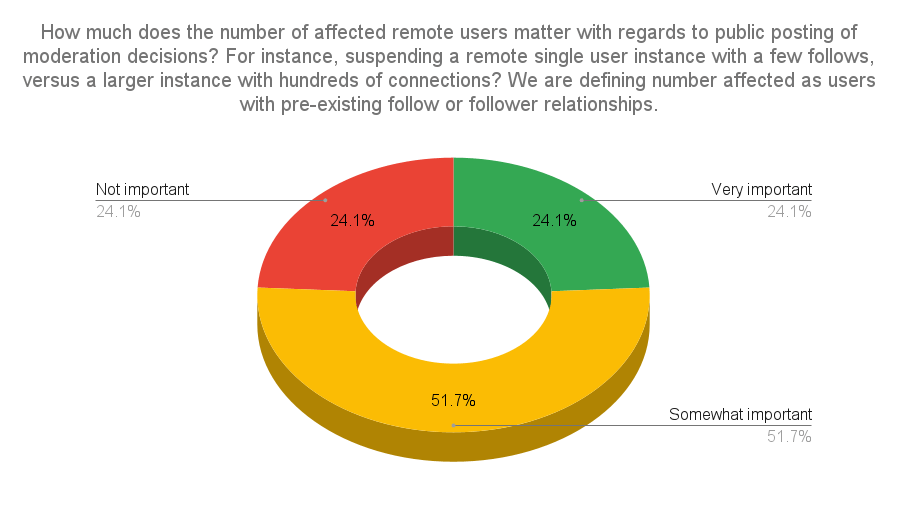

3. User Impact and Timeliness:

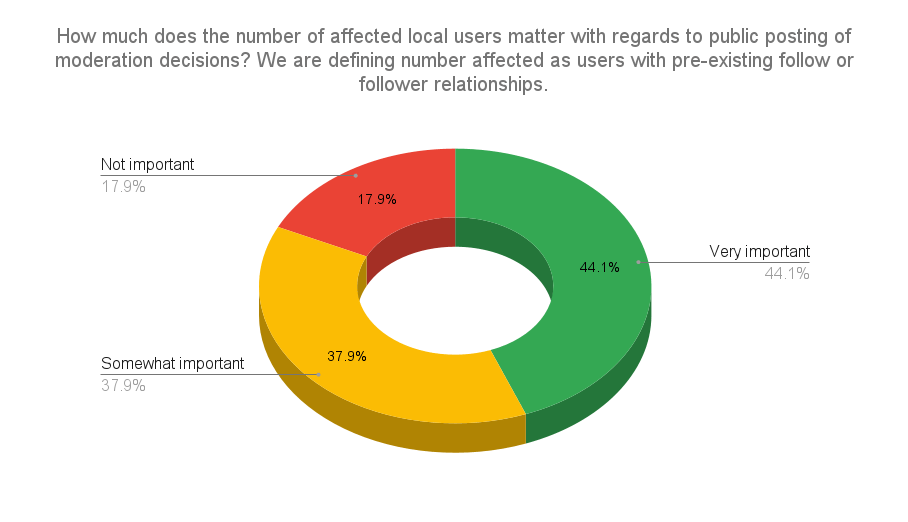

The number of affected local users was deemed more important than the number of affected remote users, reflecting a community-centric perspective.

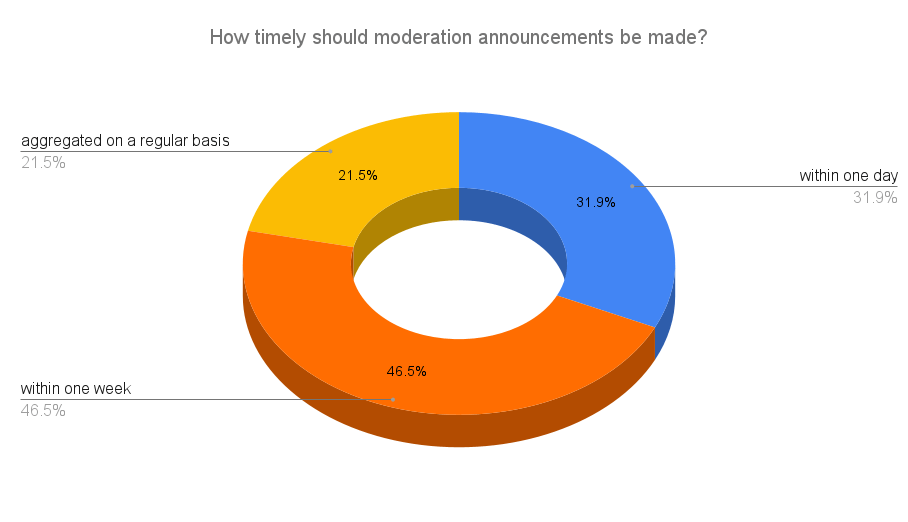

Most respondents preferred moderation decisions to be shared within one week, indicating a balance between timely communication and moderation workload.

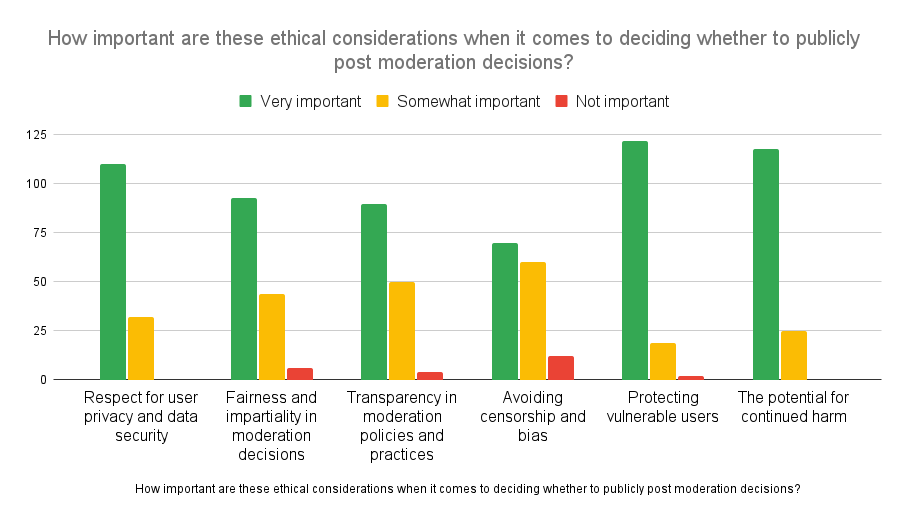

4. Ethical Considerations:

Protecting vulnerable users, preserving user privacy, and avoiding continued harm were the paramount ethical concerns for respondents. Fairness, impartiality, and transparency of policies ranked slightly lower, though all of these considerations were rated as very important.

There is an inherent challenge here that will be discussed in the Transparency Tensions and Concerns section.

5. Requests for Clarification:

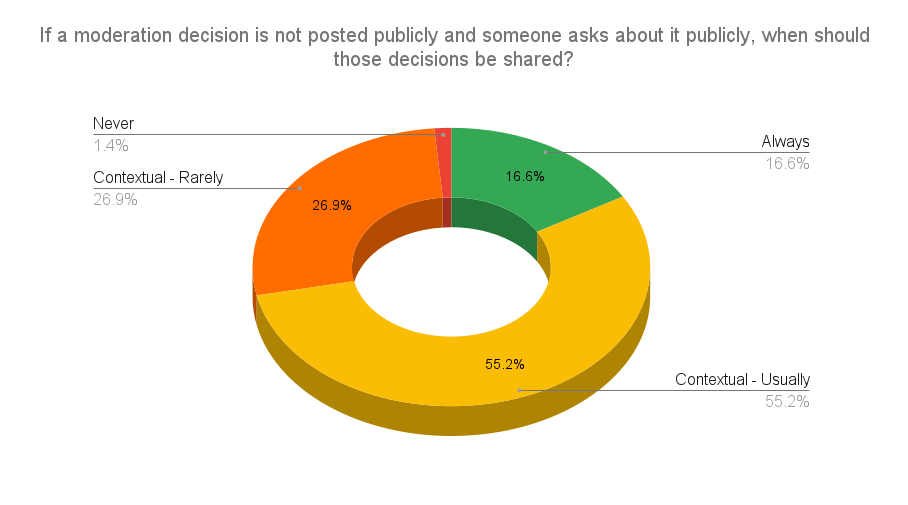

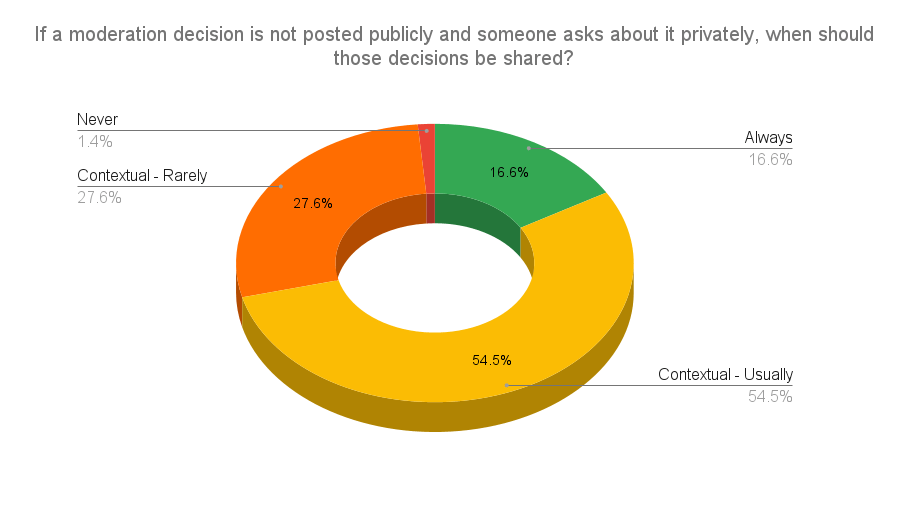

Respondents preferred contextual sharing of moderation decisions rather than automatic disclosure when asked privately or publicly. Still, there was a strong preference for moderation disclosure when asked directly.

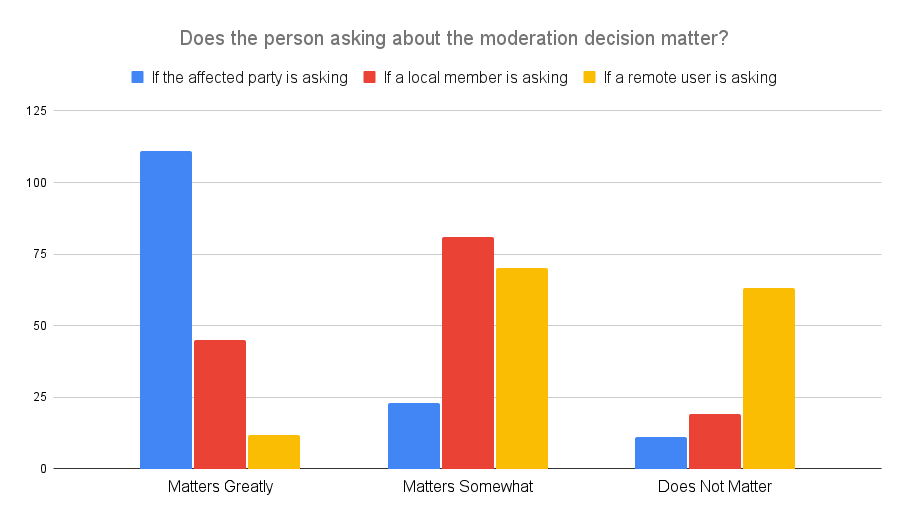

The requester’s identity strongly influenced the concern about sharing details. If the affected party is asking, the expectation is that details will be disclosed. If remote users are asking, the expectation is that their requests for disclosure carry less weight.

Note that this question was asked without clarifying what an “Affected User” was. Is a local user affected just because the potential for connection has been severed in a suspension? Or does a connection have to already exist to consider a local user affected?

6. Moderator Accountability:

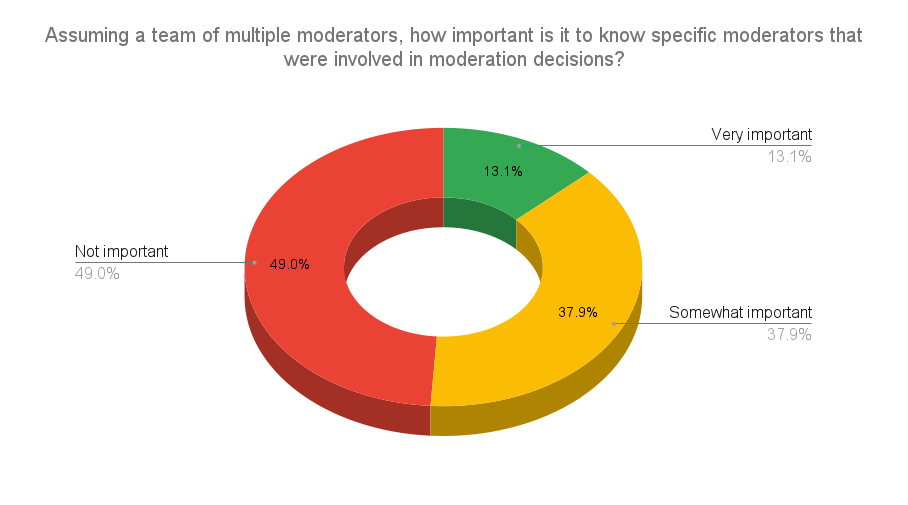

The majority felt it was only somewhat or not important to identify specific moderators responsible for actions, indicating a stronger interest in institutional rather than individual accountability. This would also help to protect individual moderators from reprisal or harassment.

7. Legal Responsibility:

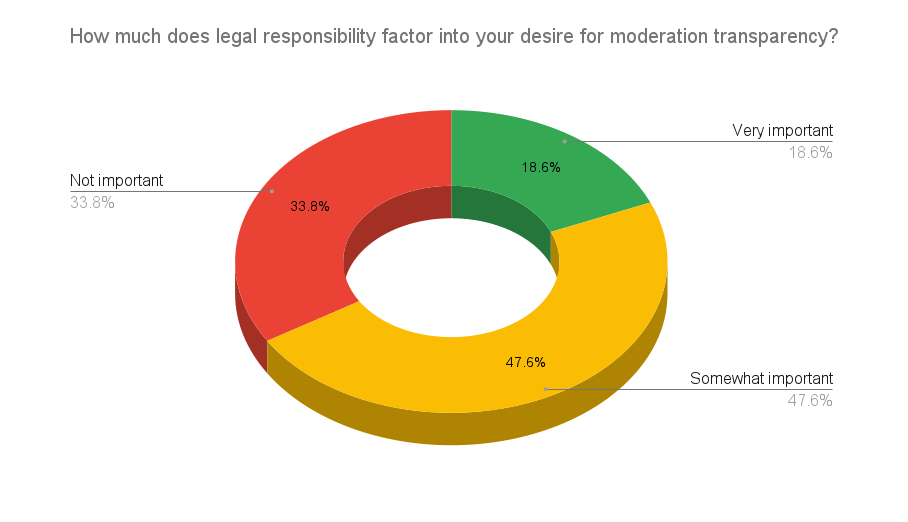

Legal implications of moderation transparency were considered somewhat important by respondents, indicating that they are aware of them but do not treat them as a primary driver of transparency. Ethical considerations were clearly much more important to respondents than legal responsibility.

8. Data and Appeals:

Aggregated moderation data was considered useful to some extent for transparency.

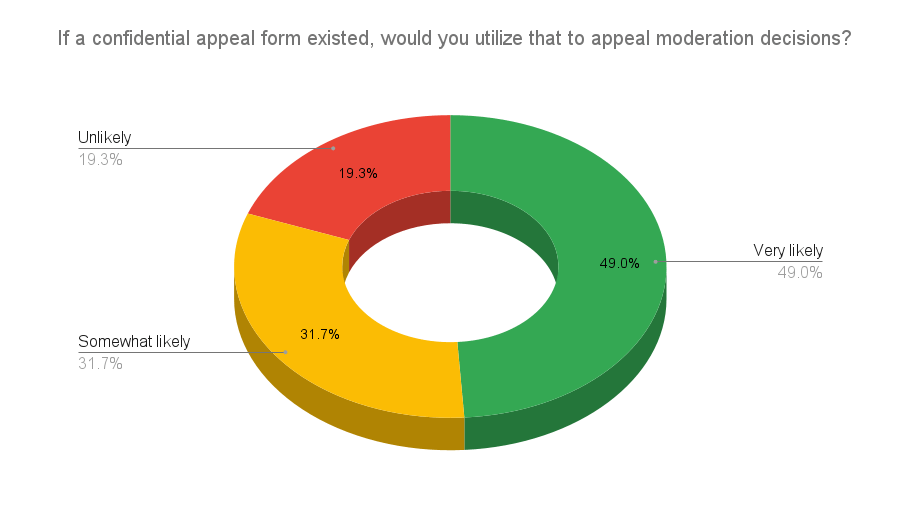

Confidential appeal mechanisms were strongly supported for personal use, less so for intervening on behalf of others.

Interpretation and Insights of Results

- Transparency is heavily valued, especially when moderation actions impact broader fediverse interactions, with a focus on remote instances. This emphasizes the importance of connections between decentralized communities.

- Respondents value a detailed and timely approach to moderation disclosures. There is a strong emphasis on the ethical considerations behind transparency, but less emphasis on legal reporting responsibilities.

- Respondents do not necessarily require immediate announcements, indicating an understanding of moderation as a careful, deliberative process rather than a reactive one.

- There is a clear differentiation between levels of transparency required depending on content severity and user impact, highlighting nuanced community expectations. The most striking examples are whether a suspension concerns a remote user versus a remote instance as a whole, and who is asking about moderation decisions that have not been publicly posted.

Transparency Tensions and Concerns

As the results of this survey make clear, moderation transparency is nuanced, and there is no one-size-fits-all answer as to how transparency should work.

There’s an inherent tension between the desire for moderation transparency and the strong emphasis on ethical considerations such as protecting user privacy and vulnerable populations.

The following section on Recommendations for Moderation Reporting is an attempt to address this tension.

Recommendations for Moderation Reporting

Please note that these recommendations are broad and open to adjustment as needed for your community and reporting standards. Not every recommendation will fit every community or instance, and there may be additional concerns to factor into your Moderation Reporting Plan.

1. Aggregated or Anonymized Transparency

Instead of detailing individual moderation cases publicly, release aggregated summaries regularly (e.g., monthly or quarterly).

Include the number of actions taken by type (spam, hate speech, etc.) without personally identifiable information.
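As a rough illustration of this kind of aggregated reporting, the sketch below counts actions per category and scope for one month and leaves account names out of the result. The `ModerationAction` record, its field names, and the sample entries are all hypothetical; a real instance would pull these from its own moderation log in whatever shape that log takes.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ModerationAction:
    """Hypothetical log entry; field names are assumptions for this sketch."""
    day: date
    category: str  # e.g. "spam", "hate speech"
    scope: str     # "local" or "remote"
    account: str   # kept in the private log, never copied into the summary


def summarize(actions, year, month):
    """Count actions per (category, scope) for one month, without identifiers."""
    counts = Counter(
        (a.category, a.scope)
        for a in actions
        if a.day.year == year and a.day.month == month
    )
    return dict(sorted(counts.items()))


# Made-up sample log for illustration only.
actions = [
    ModerationAction(date(2025, 1, 3), "spam", "remote", "@a@example.social"),
    ModerationAction(date(2025, 1, 9), "spam", "remote", "@b@example.social"),
    ModerationAction(date(2025, 1, 12), "hate speech", "local", "@c@example.org"),
    ModerationAction(date(2025, 2, 1), "harassment", "remote", "@d@example.net"),
]

summary = summarize(actions, 2025, 1)
for (category, scope), n in summary.items():
    print(f"{category} ({scope}): {n}")
```

Very small counts can still point to a specific person in a small community, so a team may also want to fold rare categories into an "other" bucket before publishing.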

2. Prioritize Remote Transparency

Implement standardized protocols for promptly and transparently communicating remote instance moderation actions due to their broader implications.

3. Contextual Communication

Be transparent about the criteria used to determine which moderation decisions are shared publicly and which remain confidential.

Clearly communicate that ethical considerations (privacy, risk of harm, vulnerability) can override general transparency norms.

Formulate clear, publicly-available policies on how moderation decisions will be communicated upon request, explicitly considering the requester’s relationship to the moderation action.

4. Ethical Moderation Framework

Outline ethical guidelines used when making moderation decisions, such as criteria for protecting vulnerable users or minimizing harm, and explain how these criteria shape transparency.

Develop explicit ethical guidelines emphasizing user protection, privacy, fairness, and harm mitigation in moderation policies, and communicate these publicly to build trust.

Use hypothetical or anonymized examples to demonstrate decision-making without exposing users.

5. Differentiated Transparency Levels

Offer different levels of detail based on audience. For instance, provide a basic public summary, with more detailed confidential disclosures available upon inquiry from directly affected individuals.
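One way to picture tiered disclosure is as a field filter applied to a moderation record, chosen by who is asking. The following is only a sketch: the `Requester` roles, the field names, and the sample record are hypothetical, and each community would decide for itself which fields belong in which tier.

```python
from enum import Enum


class Requester(Enum):
    """Hypothetical audience tiers; a real policy may define more."""
    PUBLIC = "public"
    AFFECTED = "affected"


# Fields each tier may see. Reporter and moderator identities appear in
# neither tier, so they are never disclosed by this function.
PUBLIC_FIELDS = ("action", "category", "date")
AFFECTED_FIELDS = PUBLIC_FIELDS + ("reason", "appeal_contact")


def disclose(record: dict, requester: Requester) -> dict:
    """Return only the fields the requester's tier is allowed to see."""
    fields = AFFECTED_FIELDS if requester is Requester.AFFECTED else PUBLIC_FIELDS
    return {key: record[key] for key in fields if key in record}


# Made-up record for illustration only.
record = {
    "action": "suspend",
    "category": "harassment",
    "date": "2025-01-12",
    "reason": "Repeated targeted replies after a warning",
    "appeal_contact": "appeals@example.org",
    "reporter": "@someone@example.org",
    "moderator": "mod-3",
}

public_view = disclose(record, Requester.PUBLIC)
affected_view = disclose(record, Requester.AFFECTED)
```

Using an explicit allow-list per tier means any new field added to the record stays private until someone deliberately decides otherwise.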

6. Confidential Appeal Mechanism

Emphasize confidential appeals channels, allowing those impacted to discuss moderation decisions privately and safely, thereby balancing user rights with community transparency expectations.

Clearly outline the process, criteria, and confidentiality assurances.

Select Freeform Responses

In addition to the quantitative data collected in the survey, qualitative data was collected in the form of a freeform response section at the end of the survey. Note that this field was added to the form after approximately 60 of the 145 total responses had already come in. This means that these responses are not representative of all survey respondents.

The only edits to these shared responses are formatting of quotation marks, a note identifying the survey’s host instance where tech.lgbt is referenced specifically, and the exclusion of portions that relate to specific moderation decisions that were more applicable at the time of the survey. Not all responses are included; this selection is meant to give a general idea of respondents’ positions. These responses are not representative of the views of the tech.lgbt moderation team.

“A lot of moderation depends on context, so having a trusted moderation team who understands policy and culture is much more viable than having strict procedures.”

“I think pretty much all moderation decisions should be transparent. The only exception is that I believe that users/instances suspended for CSAM should not be posted publicly in order to prevent attention being drawn to them.”

“I think that moderators should have a right to keep a decision confidential (or provide reduced information about it) if providing full (or any) information would be sufficiently disturbing to the instance userbase (i.e. if the average user of the instance would need to reach for the metaphorical ‘brain bleach’ after reading an announcement with the standard level of detail for the instance)”

“This is hard: there are goals of providing the service (e.g. following all relevant laws), protecting its users, avoiding fedidrama/burnout of various moderation teams. It’s very likely no optimal compromise can be made with these. There is also the conflict between things needing time to coordinate and calmly specify, while others post in real-time about presumed decisions.”

“Moderation is hard, and I appreciate all that you do. I prefer to treat internet communities (even large servers) as ‘someone’s living room’ - the mods set the standards for what is and isn’t appropriate, and if you don’t like it, go elsewhere.”

“Wellbeing of the moderation team is very important; if transparency turns out to make things worse or causes the moderators to be threatened transparency should be reduced.”

“IMO fedi refuses to understand how it works. I signed up on tech.lgbt [the host instance of this survey] because I trust you with my account and to protect me. I don’t need to be involved or know about every single detail. If I wanted that I would selfhost.”

“When considering what to post transparency wise in the case of violence/threats, its always good to consider what that will entail for the victim as well as the person limited/suspended. As sad as it is, some people are intentionally trying to get suspended/banned as many places as possible, almost like a badge of honor. There are also times where suspending one can cause others to ‘dog pile’ the victim with the blame. I mod/admin several large matrix chat rooms. Moderation is hard, thank you to all mods!”

“Moderation should be almost always be limited to users. Most systems have both serverside and as client features ignore lists for accounts and instances on an individual basis. There is little need for instance admins to interfere with user’s decisions (not) to block someone. There may be a point in preventing ban evasion by shielding users who blocked someone from a new account when the identity is clearly the same. On the other hand doing so proactive may be a burden for the admins and there should be better spam filter like systems in the hands of users. It is important to respect the autonomy of users because things like instance blocks create catch 22 situations when, for example, choosing an instance and either having to choose one who blocks A for reasons you may not agree with (or even without known reasons) or B, but without an option to have an instance that blocks neither. In general many Fediverse instances err on the side of overblocking what is unfortunate for new users.”

“I think defederating other instances should be well reasoned and only a last resort. I’d much prefer just banning specific users, even if they are instance admins. However, I’m all in for defederation when most users on that instance are shitty.”

“if there is a decision to defederate a popular instance (as far tech.lgbt [the host instance of this survey] users are concerned), it definitely makes sense not only to announce the decision, explain the reasons, and give people time to migrate if they choose to”

“re: question 11, I think moderators should function as one unit by consensus, as they do in my server very successfully. in my opinion, it is a failure of the moderation team as a whole if it becomes publicly relevant which individual moderator said or did what”

“Look to Metafilter for an example of good moderation of a largish community including many minoritised groups. Moderators give reasons for comment removal, give people warnings when they are close to the line, and specific people sign off on their decisions. It has worked well for over two decades. Moderation on tech.lgbt [the host instance of this survey] has become opaque and has often felt vindictive.”

“Having done moderation work for a few years myself, albeit on a different type of platform, I do have a very good understanding for the wish to just do the job as best one can and not need to constantly be open to public scrutiny, misunderstanding, misinterpretation, and so on. However, I also live for being as open, honest, and truthful as possible - and I firmly believe that being public about moderation decisions is, at the end of the day, something that keeps the platform/community healthy and honest. If one lays out one’s actions and the intentions of these actions, readily available for whomever wishes to see, then one might foster more open conversations and achieve deeper understandings between those involved as well as onlookers. Aye, there will inevitably be quarrels and complaints - especially from those unwilling to put the proverbial shoes of others on their own feet - but that would always be the case anyway; only in the instance of closed practice, it’d be sans the good.”

“Whether, and in how much detail, a moderation decision should be publicly announced and explained feels very context-dependent to me. But I’ve now had one occasion where an instance was suspended and I was (mildly) affected, and having to rely purely on trust in the moderation team that the suspension was a reasonable course of action was rather unpleasant. So I don’t think a 99% non-disclosure policy of moderation actions is the way to go. If a small group of people can in theory radically change how I experience the fediverse, I want them to have some degree of (collective) accountability, even if I personally trust them. Without more transparency, such accountability can’t really exist.”

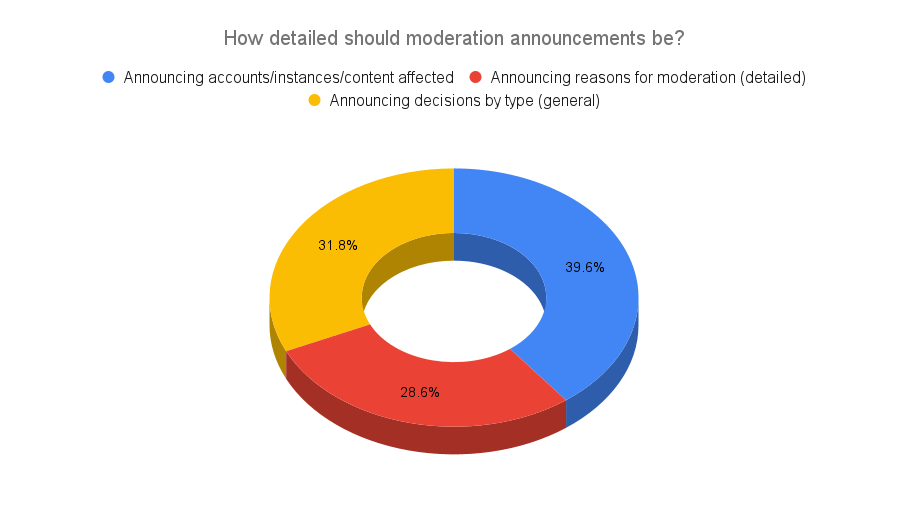

Survey Charts

Raw Data is available at the end of this report. The following charts are intended to visually display the results of the survey for comparison.


Raw Report Data

The following files contain raw data of survey responses, both for transparency and to allow further data analysis. Notably, the final two questions of the survey are not included: the freeform response section, and the email addresses for respondents who want to be informed of results. Both of these have been excluded for the privacy of respondents.

A spreadsheet of charts created from survey responses is linked as well, for a more visual overview of the survey.
